Introduction

OpenELB is an open-source load balancer implementation for Kubernetes. It lets you expose LoadBalancer Services in clusters that have no cloud provider load balancer. In this blog post, we'll explore its features and architecture, install it on a small cluster, and compare it with MetalLB.

What is OpenELB?

- OpenELB, short for Open Elastic Load Balancer, is a cloud-native, software-defined load balancer designed specifically for Kubernetes environments. It provides advanced load balancing capabilities to distribute incoming traffic across your Kubernetes pods, ensuring high availability, scalability, and reliability for your applications.

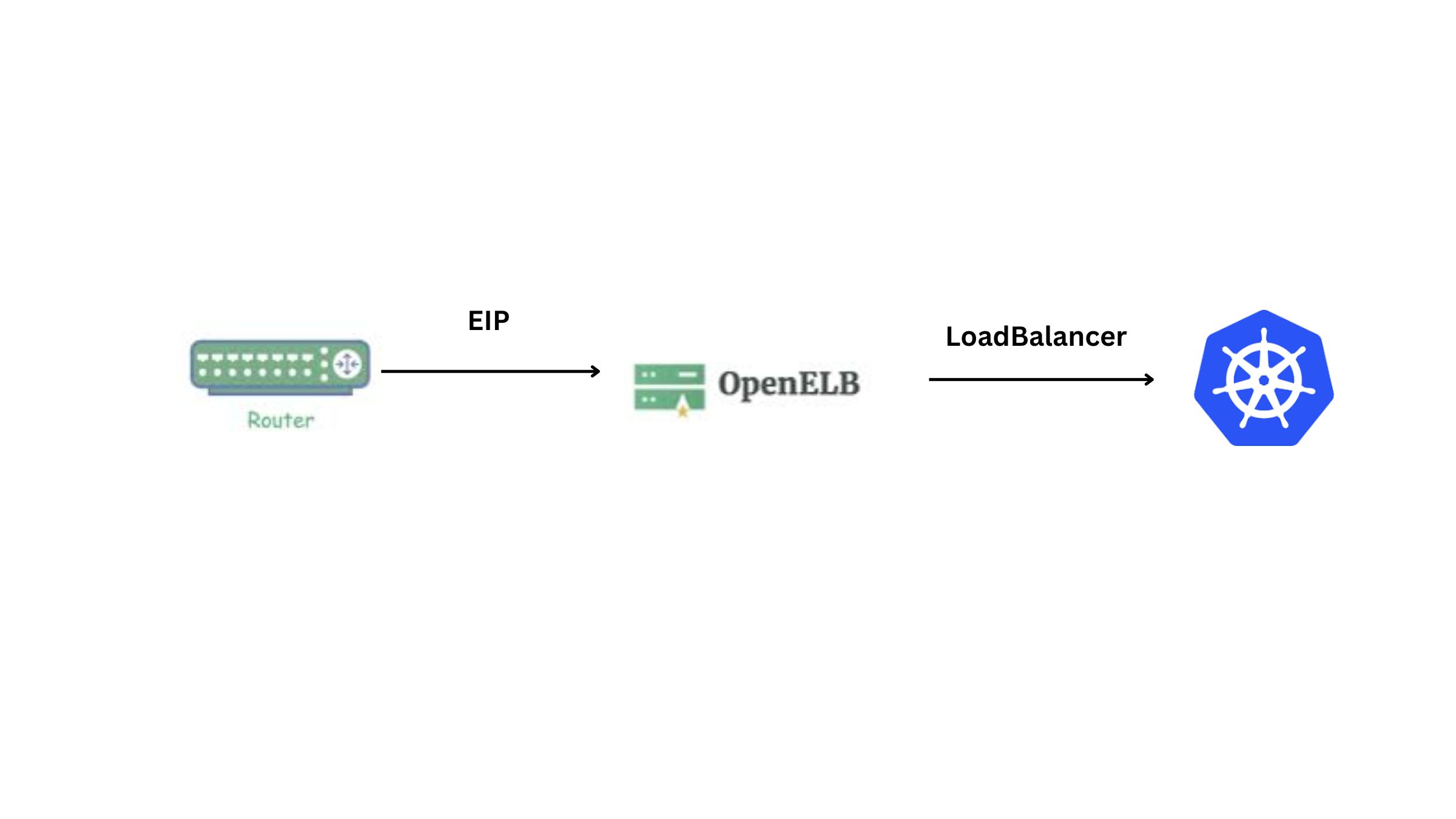

- In cloud-based Kubernetes clusters, Services are usually exposed by using load balancers provided by cloud vendors. However, cloud-based load balancers are unavailable in bare-metal environments. OpenELB allows users to create LoadBalancer Services in bare-metal, edge, and virtualization environments for external access, and provides the same user experience as cloud-based load balancers.

Core Features

- ECMP routing and load balancing

- BGP mode and Layer 2 mode

- IP address pool management

- BGP configuration using CRDs
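To make the ECMP item concrete: with equal-cost multi-path routing, a router that knows several equally good next hops for the same destination picks one per flow, usually by hashing the flow's 5-tuple, so packets of one connection stay on one path. The sketch below only illustrates that idea. The hash function, the `Flow` type, and the addresses are assumptions for the example; real routers use their own vendor-specific hashing.

```python
import hashlib
from typing import NamedTuple, Sequence


class Flow(NamedTuple):
    """A 5-tuple identifying one transport-layer flow (illustrative type)."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str


def pick_next_hop(flow: Flow, next_hops: Sequence[str]) -> str:
    """Choose one of several equal-cost next hops by hashing the flow.

    The same flow always maps to the same next hop, while different
    flows spread across all of them.
    """
    if not next_hops:
        raise ValueError("at least one next hop is required")
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(next_hops)
    return next_hops[index]


# Hypothetical values: two nodes advertising the same Service IP.
nodes = ["172.16.16.101", "172.16.16.102"]
flow = Flow("10.0.0.7", 40312, "172.16.16.190", 80, "tcp")
print(pick_next_hop(flow, nodes))
```

Hashing per flow rather than per packet is the usual design choice because it avoids reordering packets within a single TCP connection.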

Key Features of OpenELB:

Dynamic Load Balancing: OpenELB dynamically routes traffic to healthy pods based on predefined rules and policies, ensuring efficient utilization of resources.

Scalability: OpenELB can be deployed with multiple replicas, so capacity can be increased by scaling the OpenELB deployment as traffic grows.

High Availability: OpenELB ensures high availability by automatically detecting and redirecting traffic away from failed or unhealthy pods, minimizing downtime.

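Layer 2 and BGP Modes: OpenELB works at the network level. In Layer 2 mode it answers ARP requests for a Service's external IP, and in BGP mode it advertises routes to that IP; forwarding to pods is still handled by kube-proxy. OpenELB does not inspect HTTP/HTTPS traffic, so Layer 7 routing needs a separate Ingress controller.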

Integration with Kubernetes: OpenELB seamlessly integrates with Kubernetes, leveraging Kubernetes services and resources for easy configuration and management.

Customization and Extensibility: OpenELB provides a flexible architecture that allows for customization and extension through plugins and custom configurations.

Architecture of OpenELB

OpenELB follows a modular architecture, consisting of the following components:

Controller:

- The Controller serves as the brain of the OpenELB system. It manages the configuration, monitoring, and orchestration of load balancer instances.

- It interacts with the Kubernetes API server to gather information about services, endpoints, and pods within the cluster.

- The Controller continuously monitors the health and availability of backend pods and dynamically updates the configuration of load balancer instances based on changes in the cluster.

Load Balancer Instances:

- Load Balancer Instances are responsible for receiving incoming traffic and distributing it to backend pods.

- They can be deployed as independent instances or as part of a pool of load balancer nodes, depending on the scale and traffic requirements of the cluster.

- Each load balancer instance runs the necessary software components to perform traffic routing and load balancing, such as a reverse proxy or load balancing algorithm.

Service Discovery:

- OpenELB integrates with Kubernetes service discovery mechanisms to dynamically discover backend pods and their associated endpoints.

- It leverages Kubernetes Services to expose applications running in the cluster, allowing them to be accessed through a stable DNS name or IP address.

- Service Discovery ensures that OpenELB always has up-to-date information about the available backend pods and their network addresses.

Health Checking:

- Health Checking is an essential component of OpenELB responsible for monitoring the health and responsiveness of backend pods.

- It periodically sends health checks to backend pods to verify their availability and performance.

- If a backend pod becomes unhealthy or unresponsive, Health Checking notifies the Controller, which takes appropriate action to reroute traffic away from the problematic pod.

Data Store:

- OpenELB keeps its configuration, such as address pools and BGP settings, in Kubernetes custom resources (Eip, BgpConf, and BgpPeer).

- The Kubernetes API server persists these objects in etcd, so no separate database has to be deployed for OpenELB.

- This gives OpenELB a centralized repository for storing and retrieving the information critical to its operation.

Install OpenELB on Kubernetes

Prerequisites

You need a Kubernetes cluster running Kubernetes 1.15 or later.

OpenELB is designed to be used in bare-metal Kubernetes environments. However, you can also use a cloud-based Kubernetes cluster for learning and testing.

My Home Cluster

    root@kmaster:~# kubectl get nodes -o wide
    NAME      STATUS   ROLES           AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
    kmaster   Ready    control-plane   95m   v1.26.0   172.16.16.100   <none>        Ubuntu 22.04.1 LTS   5.15.0-58-generic   containerd://1.6.28
    worker1   Ready    <none>          75m   v1.26.0   172.16.16.101   <none>        Ubuntu 22.04.1 LTS   5.15.0-58-generic   containerd://1.6.28
    worker2   Ready    <none>          75m   v1.26.0   172.16.16.102   <none>        Ubuntu 22.04.1 LTS   5.15.0-58-generic   containerd://1.6.28
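The nodes above report v1.26.0, which is comfortably past the 1.15 minimum. If you want to script that prerequisite check, a minimal sketch could look like the following. The function names are my own, and it assumes version strings in the `vMAJOR.MINOR.PATCH` form that `kubectl get nodes` prints.

```python
import re
from typing import Tuple

MIN_VERSION = (1, 15)  # minimum Kubernetes version stated in the prerequisites


def parse_k8s_version(version: str) -> Tuple[int, int]:
    """Extract (major, minor) from strings such as 'v1.26.0' or '1.15'."""
    match = re.match(r"v?(\d+)\.(\d+)", version.strip())
    if match is None:
        raise ValueError(f"unrecognized Kubernetes version: {version!r}")
    return int(match.group(1)), int(match.group(2))


def meets_minimum(version: str, minimum: Tuple[int, int] = MIN_VERSION) -> bool:
    """Return True if the version is at least the required minimum."""
    return parse_k8s_version(version) >= minimum


print(meets_minimum("v1.26.0"))
```

Comparing `(major, minor)` tuples avoids the classic mistake of comparing version strings lexically, where "1.9" would sort after "1.15".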

Install OpenELB Using Helm

Log in to the Kubernetes cluster over SSH and run the following commands:

    root@master:~# helm repo add kubesphere-stable https://charts.kubesphere.io/stable
    "kubesphere-stable" has been added to your repositories
    root@master:~# helm repo update
    root@master:~# kubectl create ns openelb-system
    namespace/openelb-system created
    root@master:~# helm install openelb kubesphere-stable/openelb -n openelb-system
    NAME: openelb
    LAST DEPLOYED: Mon Feb 26 10:15:28 2024
    NAMESPACE: openelb-system
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None
    NOTES:
    The OpenELB has been installed.

Run the following command to check whether the status of openelb-manager is READY: 1/1 and STATUS: Running. If yes, OpenELB has been installed successfully.

    root@master:~# kubectl get po -n openelb-system
    NAME                              READY   STATUS      RESTARTS   AGE
    openelb-admission-create-b9h89    0/1     Completed   0          113s
    openelb-admission-patch-d77jx     0/1     Completed   0          113s
    openelb-keepalive-vip-5k5pp       1/1     Running     0          75s
    openelb-keepalive-vip-6p6zq       1/1     Running     0          75s
    openelb-manager-8c8b9b89c-kdn2l   1/1     Running     0          113s
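If you would rather automate the READY/STATUS check than eyeball it, the listing can be parsed as plain text. This is only a sketch: `manager_ready` is a hypothetical helper, and it assumes the default column layout of `kubectl get po` shown above.

```python
SAMPLE_LISTING = """\
NAME                              READY   STATUS      RESTARTS   AGE
openelb-admission-create-b9h89    0/1     Completed   0          113s
openelb-admission-patch-d77jx     0/1     Completed   0          113s
openelb-keepalive-vip-5k5pp       1/1     Running     0          75s
openelb-keepalive-vip-6p6zq       1/1     Running     0          75s
openelb-manager-8c8b9b89c-kdn2l   1/1     Running     0          113s
"""


def manager_ready(listing: str) -> bool:
    """Return True if at least one openelb-manager pod exists and every
    openelb-manager pod shows all containers ready and STATUS Running."""
    found = False
    for line in listing.splitlines():
        fields = line.split()
        if len(fields) < 3 or not fields[0].startswith("openelb-manager"):
            continue  # header, blank line, or a different pod
        found = True
        ready, _, total = fields[1].partition("/")
        if ready != total or fields[2] != "Running":
            return False
    return found


print(manager_ready(SAMPLE_LISTING))
```

The admission pods are deliberately ignored: they are one-shot jobs, so `0/1 Completed` is their expected final state.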

You can also confirm that the admission webhooks and the OpenELB CRDs were registered:

    root@kmaster:~# kubectl get validatingwebhookconfiguration
    NAME                WEBHOOKS   AGE
    openelb-admission   1          7m47s
    root@kmaster:~# kubectl get mutatingwebhookconfigurations
    NAME                WEBHOOKS   AGE
    openelb-admission   1          7m55s
    root@kmaster:~# kubectl get crd |grep kubesphere
    bgpconfs.network.kubesphere.io   2024-02-26T07:02:48Z
    bgppeers.network.kubesphere.io   2024-02-26T07:02:48Z
    eips.network.kubesphere.io       2024-02-26T07:02:48Z

Configuration

OpenELB can be configured for several use cases:

- Configure IP Address Pools Using Eip

- Configure OpenELB in BGP Mode

- Configure OpenELB for Multi-Router Clusters

- Configure Multiple OpenELB Replicas

I am using an Eip object in Layer 2 mode to configure OpenELB on this cluster.

Configure IP Address Pools Using Eip

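Currently, OpenELB supports only IPv4; IPv6 support is planned.

Because this pool uses Layer 2 mode, first enable strictARP for kube-proxy so that OpenELB, rather than every node interface, answers ARP requests for the Service addresses. Edit the kube-proxy ConfigMap, set strictARP to true, and restart kube-proxy:

    root@master:~# kubectl edit configmap kube-proxy -n kube-system
    ......
    ipvs:
      strictARP: true
    ......
    root@master:~# kubectl rollout restart daemonset kube-proxy -n kube-system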
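To double-check the setting afterwards, you can dump the ConfigMap (for example with `kubectl get configmap kube-proxy -n kube-system -o yaml`) and look for the flag. The helper below is a hypothetical sketch that does a plain text match instead of parsing YAML, which keeps it dependency-free but assumes `strictARP` appears on its own line, as it does in the kube-proxy configuration.

```python
import re

# Matches a line such as "  strictARP: true", optionally followed by a comment.
_STRICT_ARP_TRUE = re.compile(r"^\s*strictARP:\s*true\s*(#.*)?$", re.MULTILINE)


def strict_arp_enabled(config_text: str) -> bool:
    """Return True if the kube-proxy config text sets strictARP to true."""
    return _STRICT_ARP_TRUE.search(config_text) is not None


sample = """\
ipvs:
  strictARP: true
"""
print(strict_arp_enabled(sample))
```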

    root@master:~# cat eip.yaml
    apiVersion: network.kubesphere.io/v1alpha2
    kind: Eip
    metadata:
      name: eip-pool
    spec:
      address: 172.16.16.190-172.16.16.240
      protocol: layer2
      disable: false
      interface: eth0
    root@kmaster:~# kubectl apply -f eip.yaml
    eip.network.kubesphere.io/eip-pool configured

Verify the pool status:

    root@kmaster:~# kubectl get eip
    NAME       CIDR                          USAGE   TOTAL
    eip-pool   172.16.16.190-172.16.16.240   1       51

    root@kmaster:~# kubectl get eip eip-pool -oyaml
    apiVersion: network.kubesphere.io/v1alpha2
    kind: Eip
    metadata:
      annotations:
        kubectl.kubernetes.io/last-applied-configuration: |
          {"apiVersion":"network.kubesphere.io/v1alpha2","kind":"Eip","metadata":{"annotations":{},"name":"eip-pool"},"spec":{"address":"172.16.16.190-172.16.16.240","disable":false,"interface":"eth0","protocol":"layer2"}}
      creationTimestamp: "2024-02-26T07:05:46Z"
      finalizers:
      - finalizer.ipam.kubesphere.io/v1alpha1
      generation: 3
      name: eip-pool
      resourceVersion: "4809"
      uid: d2b70a8a-04ae-4b1c-b8bd-d317d9ea67f1
    spec:
      address: 172.16.16.190-172.16.16.240
      disable: false
      interface: eth0
      protocol: layer2
    status:
      firstIP: 172.16.16.190
      lastIP: 172.16.16.240
      poolSize: 51
      ready: true
      v4: true
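The poolSize of 51 follows directly from the range: both ends are inclusive, so 240 - 190 + 1 = 51 addresses. A small sketch with Python's standard `ipaddress` module shows the arithmetic and also confirms that the node IPs do not fall inside the pool. The helper names are my own, and the sketch handles only the `first-last` range form (or a single address) used in this post.

```python
import ipaddress
from typing import Tuple


def parse_range(address_range: str) -> Tuple[ipaddress.IPv4Address, ipaddress.IPv4Address]:
    """Parse 'first-last' (or a single address) into two IPv4Address objects."""
    first_text, _, last_text = address_range.partition("-")
    first = ipaddress.IPv4Address(first_text.strip())
    last = ipaddress.IPv4Address((last_text or first_text).strip())
    if last < first:
        raise ValueError(f"range ends before it starts: {address_range!r}")
    return first, last


def pool_size(address_range: str) -> int:
    """Number of addresses in the range, counting both ends."""
    first, last = parse_range(address_range)
    return int(last) - int(first) + 1


def in_pool(ip: str, address_range: str) -> bool:
    """Return True if ip lies inside the inclusive range."""
    first, last = parse_range(address_range)
    return first <= ipaddress.IPv4Address(ip) <= last


POOL = "172.16.16.190-172.16.16.240"
print(pool_size(POOL))                 # matches poolSize in the Eip status
print(in_pool("172.16.16.100", POOL))  # kmaster's node IP
```

Keeping node addresses out of the pool matters because OpenELB hands pool addresses to Services, and an overlap would create an IP conflict on the network.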

Let's deploy an application and expose it through a LoadBalancer Service.

    root@kmaster:~# cat nginx-deploy.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:latest
            ports:
            - containerPort: 80

    root@kmaster:~# cat svc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
      annotations:
        lb.kubesphere.io/v1alpha1: openelb # to specify that the Service uses OpenELB
        protocol.openelb.kubesphere.io/v1alpha1: layer2 # specifies that OpenELB is used in Layer2 mode
        eip.openelb.kubesphere.io/v1alpha2: eip-pool # specifies the Eip object used by OpenELB
    spec:
      selector:
        app: nginx
      type: LoadBalancer
      ports:
      - name: http
        port: 80
        targetPort: 80
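The three annotations are what tie the Service to OpenELB, so they are easy to get wrong when written by hand for many Services. One option is to generate the manifest. The sketch below builds the same Service as a dictionary and prints it as JSON, which `kubectl apply -f -` also accepts. `openelb_service` is a hypothetical helper; the annotation keys are copied from the manifest above.

```python
import json
from typing import Dict


def openelb_service(name: str, selector: Dict[str, str], port: int,
                    eip_name: str, protocol: str = "layer2") -> dict:
    """Build a LoadBalancer Service manifest carrying the OpenELB annotations."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            "annotations": {
                # Hand this Service to OpenELB.
                "lb.kubesphere.io/v1alpha1": "openelb",
                # Mode OpenELB should use for this Service.
                "protocol.openelb.kubesphere.io/v1alpha1": protocol,
                # Eip object to allocate the external IP from.
                "eip.openelb.kubesphere.io/v1alpha2": eip_name,
            },
        },
        "spec": {
            "type": "LoadBalancer",
            "selector": selector,
            "ports": [{"name": "http", "port": port, "targetPort": port}],
        },
    }


manifest = openelb_service("nginx", {"app": "nginx"}, 80, "eip-pool")
print(json.dumps(manifest, indent=2))
```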

    root@kmaster:~# kubectl apply -f nginx-deploy.yaml
    root@kmaster:~# kubectl apply -f svc.yaml
    root@kmaster:~# kubectl get po,svc
    NAME                         READY   STATUS    RESTARTS   AGE
    pod/nginx-7f456874f4-knvht   1/1     Running   0          16m

    NAME            TYPE           CLUSTER-IP       EXTERNAL-IP     PORT(S)        AGE
    service/nginx   LoadBalancer   10.104.174.233   172.16.16.190   80:31282/TCP   15m

Verify that the application is accessible on the assigned load balancer IP:

    root@kmaster:~# curl 172.16.16.190
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
    html { color-scheme: light dark; }
    body { width: 35em; margin: 0 auto;
    font-family: Tahoma, Verdana, Arial, sans-serif; }
    </style>
    </head>
    <body>
    <h1>Welcome to nginx!</h1>
    <p>If you see this page, the nginx web server is successfully installed and
    working. Further configuration is required.</p>
    <p>For online documentation and support please refer to
    <a href="http://nginx.org/">nginx.org</a>.<br/>
    Commercial support is available at
    <a href="http://nginx.com/">nginx.com</a>.</p>
    <p><em>Thank you for using nginx.</em></p>
    </body>
    </html>
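The same check can be scripted, for instance as a smoke test after a deployment. This is a sketch using only the standard library; `fetch` and `is_nginx_welcome` are hypothetical helper names, and the URL in the usage comment is the external IP assigned in this walkthrough, which will differ on your cluster.

```python
from urllib.request import urlopen


def fetch(url: str, timeout: float = 5.0) -> str:
    """Download a page and return its body as text."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def is_nginx_welcome(body: str) -> bool:
    """Return True if the body looks like the default nginx welcome page."""
    return "<title>Welcome to nginx!</title>" in body


# Usage from a machine on the same network as the cluster:
#   body = fetch("http://172.16.16.190/")
#   print(is_nginx_welcome(body))
```

Separating the download from the content check keeps the check itself testable without network access.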

OpenELB vs MetalLB

MetalLB and OpenELB both provide load balancing functionality in Kubernetes environments, but they differ in approach and features. Let's compare them on several aspects:

Architecture:

MetalLB: MetalLB is a load balancer implementation for bare-metal Kubernetes clusters. It uses standard network protocols (ARP/NDP in Layer 2 mode, BGP in BGP mode) to attract traffic for Service IPs to cluster nodes. It consists of a controller, which handles IP address allocation, and speaker pods on each node, which announce the allocated addresses.

OpenELB: OpenELB, or Open Elastic Load Balancer, is a load balancer implementation designed specifically for Kubernetes environments. It operates as a Kubernetes-native solution, using Custom Resource Definitions (CRDs) and a controller (openelb-manager) to manage address pools and BGP settings within the cluster. Like MetalLB, it announces Service addresses through Layer 2 (ARP) or BGP rather than proxying traffic itself.

Installation and Configuration:

MetalLB: MetalLB can be installed as a Kubernetes controller and speaker components using YAML manifests or Helm charts. It requires configuring address pools and routing protocols to allocate IP addresses and route traffic effectively.

OpenELB: OpenELB can be installed as a Kubernetes controller and speaker components using YAML manifests or Helm charts as well. It allows for configuring IP address pools and load balancing policies using Custom Resource Definitions (CRDs) and YAML configurations.

Features:

MetalLB: MetalLB primarily focuses on providing simple, network-level load balancing capabilities for Kubernetes services. It supports both Layer 2 and Layer 3 load balancing modes and allows for the allocation of IP addresses from specific address pools.

OpenELB: OpenELB covers the same ground with a Kubernetes-native configuration model. It supports BGP mode with ECMP routing, Layer 2 mode, IP address pool management through Eip objects, and BGP configuration through CRDs. Neither project performs Layer 7 (HTTP/HTTPS) load balancing; that is the job of an Ingress controller.

Community and Adoption:

MetalLB: MetalLB has gained significant adoption within the Kubernetes community and is widely used for providing load balancing functionality in on-premises and bare-metal Kubernetes deployments.

OpenELB: OpenELB is a newer project compared to MetalLB but has been gaining traction as a Kubernetes-native load balancing solution. It is supported by KubeSphere, an open-source Kubernetes platform, and has been integrated into various Kubernetes distributions and platforms.

Flexibility and Customization:

MetalLB: MetalLB provides basic load balancing functionality with support for customizing IP address allocation and routing configurations. It is suitable for simple use cases and environments where network infrastructure integration is straightforward.

OpenELB: OpenELB exposes its configuration through CRDs such as Eip, BgpConf, and BgpPeer, and it can be set up for scenarios such as multi-router clusters and multiple OpenELB replicas.

In summary, MetalLB and OpenELB are both effective solutions for providing load balancing in Kubernetes environments, but they cater to different use cases and offer varying levels of features and functionality. The choice between MetalLB and OpenELB depends on your specific requirements, preferences, and the complexity of your Kubernetes deployment.